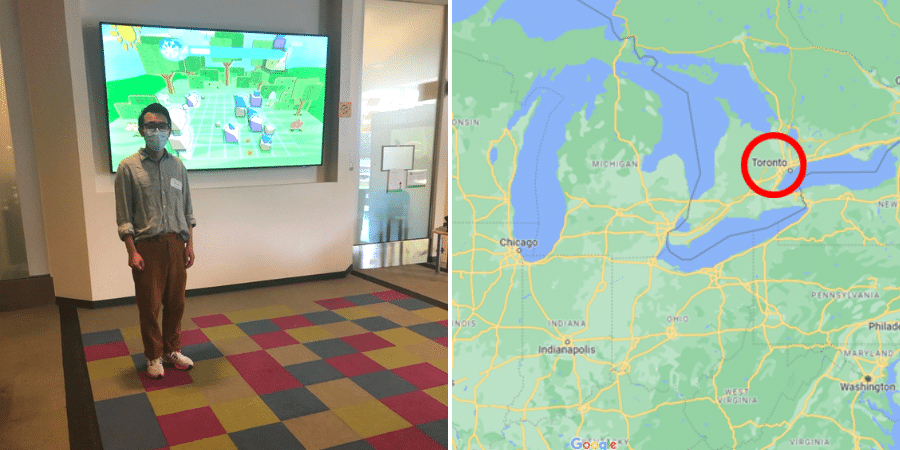

Above image, left: Darryl standing on a touch-sensitive carpet. This technology is designed to help children practise staying still and to relieve stress. Stepping on a tile causes a rock to appear on the screen, and staying on the tile causes the rock to grow and form different creative shapes. This encourages children to stay relaxed and comfortable, and the carpet was often used while children waited for therapy.

Above image, right: Location of the Holland Bloorview Research Institute

Article written by CPA Research Assistant, Darryl Chiu

In July 2022 I had the opportunity to visit and tour the Holland Bloorview Research Institute (BRI) in Toronto, Canada. The Research Institute is part of the Holland Bloorview Kids Rehabilitation Hospital, Canada’s largest paediatric rehabilitation hospital. The institute is renowned for its client-focused and family-centred research in childhood disability.

I was given a tour of the building by Fanny Hotze, a paediatric assistive technology specialist with a master’s degree in physics and electronic engineering. She is a researcher with the Paediatric Rehabilitation Intelligent Systems Multidisciplinary (PRISM) Lab, a team that brings together people from different disciplines to improve the lives of children with disabilities and special needs through applied science and engineering.

The lab had recently been refurbished (with some rooms still being renovated), so each room had shiny new equipment to support cutting-edge research. The lab contained areas such as a soldering station (for electronics), a workbench for altering prosthetics, and a fume cupboard (see images below).

Above: fume cupboard (left); workbench (centre); soldering station (right)

Aside from the lab, there are rooms dedicated to the study and use of brain-computer interfaces (BCIs). These are machines that can read and interpret a user’s brain signals. They have the potential to be used for communication without the need for speech or physical gestures. Each room was dedicated to a different type of BCI, depending on the type of signal being measured. The room pictured below was used for BCIs that read electroencephalogram (EEG) signals: recordings of the brain’s electrical activity, which can change in response to an external stimulus.

Above: BCI room, containing a BCI that reads EEG (electroencephalogram) brain signals. The BCI is connected to a computer that reads and interprets the signals.

It was incredibly exciting to see all the new equipment and technology being used for disability research. After the tour, the team showed me a demonstration of one of their ongoing projects, which is being conducted in collaboration with the Cerebral Palsy Alliance.

Switch-App

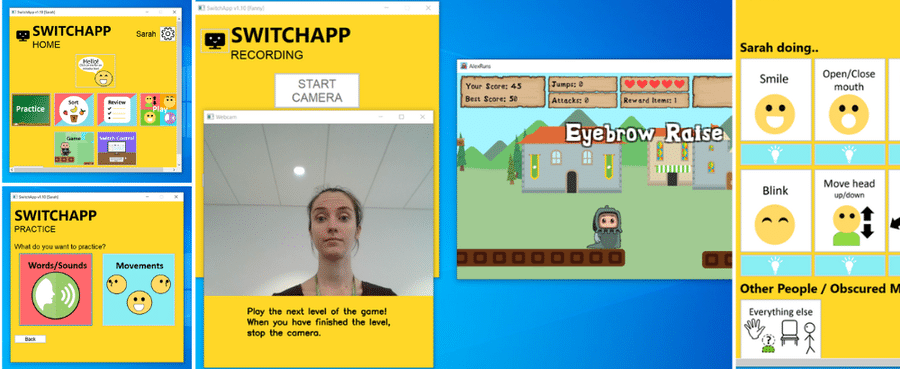

The team in Canada is currently working on a project called Switch-App, software that recognises a user’s sounds or facial movements and uses them to control a program. Using a camera and microphone, Switch-App can recognise facial movements (such as smiling or raising an eyebrow) and sounds or key phrases (such as a simple “yes” or “no”). These movements and sounds can then be used as commands to control a computer or communication board. The software could be extremely beneficial for children with disabilities, as it provides a personalised control system for a computer or tablet. Switch-App is an AI-based program that can learn a person’s specific movements and keywords, so that the user can operate it easily in a home environment.

To test the usability and performance of Switch-App, the team will assess whether children with cerebral palsy can play a custom video game using facial movements and/or keywords as the controls. The technology will then be tested in a home setting to see whether children with cerebral palsy can use Switch-App to access their usual communication and/or writing software.

As a demonstration, I got to try out Switch-App to see whether I could play a video game using only facial movements as my controls. The first step is for the software to learn to recognise the user’s facial movements. To do this, you click “Practice” and choose which facial movement or sound you want to practise. I practised smiling in front of the camera while the game was running, so that the software could record and analyse my smile during play. The game is a simple jumping game in which you control a character that must jump at certain times to reach the end of the level. The game prompted me to smile whenever I needed to jump, so that the software could link my smile to the moments when the jump control needed to be pressed. The software needs around 30 to 50 recordings of a smile before it can reliably recognise that facial movement.

Above: Home screen of the Switch-App software (top left); the software can recognise words, sounds and facial movements by recording your movements and vocalisations via a camera and microphone (bottom left); when practising, the game prompts you to perform the facial movement or sound you are training whenever your character needs to jump. Here, the software is prompting the user to raise their eyebrows and press the jump button (spacebar) so that it can learn to recognise that facial movement as the command (middle); sorting each video recording by facial movement. Because I was only practising smiling, I mostly sorted the clips into either the smiling category or the “no movement” category (right).

After recording, I had to sort the recordings according to whether or not I was smiling at each moment. The software played back a section of a recording, and I chose which facial movement was shown at that time. This labelling trains the software to recognise which facial movement is being displayed.

After sorting, you can choose to review the labels before playing the game. Once the game is launched, you can assign the controls to the facial movement or sound of your choice (if you have trained more than one input). The game worked extremely well, and I was able to make my in-game character jump just by smiling at the camera! The software was very easy to use, and the research team gave very clear instructions on how to use it. It will be exciting to see how this software is used by children with cerebral palsy.